Introduction

This tour is to help those new to Datomic understand Datomic's built-in datalog by providing a simple domain and schema, and by walking through some use cases. For a more complete treatment of Datomic's query capabilities, please take a look at the

documentation.

Reading the Code

To follow along in code, you can download Datomic Free Edition at

http://downloads.datomic.com/free.html, and the sample project at

https://github.com/datomic/day-of-datomic. The code examples should be executed interactively, in the order presented in the article, from a Clojure REPL. The complete code is in the

query_tour.clj file. The

=> prefix indicates responses that is printed by the REPL. The use of ellipsis (

…) in output indicates that a larger result has been truncated for brevity.

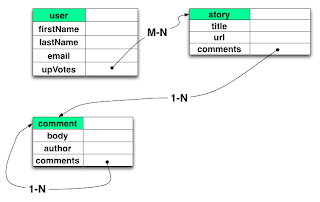

A Simple Schema

The example queries that follow work against a simplified schema that you might use for a social news database, containing users, stories, and comments:

Get Connected

In order to get a database connection, you will need to

require namespaces for the Datomic API and for the news application, then setup a sample database.

Listing 1: Getting Connected

(require

'[datomic.api :as d]

'[datomic.samples.news :as news])

(def uri "datomic:mem://news")

(def conn (news/setup-sample-db-1 uri))

Get a Database Value

The connection from the previous step is already populated with a schema and sample data, but before you query against it, you must get the current

value of the database. Syntactically this is trival:

Listing 2: Getting a Database Value

(def db (d/db conn))

While the syntax of this step is trivial, the semantic implications are deep. Datomic queries do not happen "over there" in some database process, they happen here, in your process's memory space.

A first query

Users in the system are identified by a

:user/email attribute. Lets find all of them:

Listing 3: Finding All Users

(d/q '[:find ?e

:where [?e :user/email]]

db)

=> #{[17592186045424] [17592186045425]}

The large integers are entity ids. Entity ids are auto-assigned, so you may not see the same values on your system. There are several things to note here:

- As query is the most commonly used function in Datomic, it has the terse name d/q.

- The first argument is a query expression. The :where clause indicates what you want to find, "those entities ?e that have a :user/email attribute." The :find clause tells which variables to return.

- The second argument is the database value we obtained in the previous step.

A simple join

Rather than finding all users, let's query for a particular user. This requires joining two query inputs: the database value, and the email you are seeking:

Listing 4: Finding a Specific User

(d/q '[:find ?e

:in $ ?email

:where [?e :user/email ?email]]

db

"editor@example.com")

=> #{[17592186045425]}Notice that there are now three arguments to the query: the query expression plus the two inputs. Also, since there are two inputs, there is now an

:in clause to name the inputs, in the order they appear, e.g.

$ binds the database, and

?email binds "editor@example.com". Names starting with

$ name databases, and names starting with

? name variables.

A database join

The previous join of a database to a single prebound variable may not even look like a join to you – in many databases this operation would be called a parameterized query. So let's look at a more traditional join, that leverages indexes in the database to associate more than one "type" of entity. The following query will find all of the editor's comments:

Listing 5: Finding a User's Comments

(d/q '[:find ?comment

:in $ ?email

:where [?user :user/email ?email]

[?comment :comment/author ?user]]

db

"editor@example.com")

=> #{[17592186045451]

[17592186045450]

... }

Notice that the

:where clause now has two data patterns: one associating users to their emails, and another associating comments to the user that authored them. Because the variable

?user appears in both clauses, the joins on

?user, finding only comments made by the editor.

Aggregates

You can obtain aggregates by changing the

:find clause to include an aggregating function. Instead of finding the editor's comments, let's just

count them:

Listing 6: Returning an Aggregate

(d/q '[:find (count ?comment)

:in $ ?email

:where [?user :user/email ?email]

[?comment :comment/author ?user]]

db

"editor@example.com")

=> [[10]]

More joins

Queries can make many joins. The following query joins comments to their referents, and joins the referents to

:user/email to find any comments that are about people:

Listing 7: Multiple Joins

(d/q '[:find (count ?comment)

:where [?comment :comment/author]

[?commentable :comments ?comment]

[?commentable :user/email]]

db)

=> []

No results is good news, because you don't want to let things get personal by allowing people to comment on other people.

Schema-aware joins

You cannot comment on people, but what kinds of things

can you comment on?

The three slots you have seen in data patterns so far are

entity,

attribute, and

value. Up this point, the

entity and

value positions have typically been variables, but the

attribute has always been constant.

That need not be the case. Here is a query that finds all the attributes of entities that have been commented on:

Listing 8: A Schema Query

(d/q '[:find ?attr-name

:where [?ref :comments]

[?ref ?attr]

[?attr :db/ident ?attr-name]]

db)

=> #{[:story/title]

[:comment/body]

[:story/url]

[:comment/author]

[:comments]}

Schema entities are ordinary entities, like any other data in the system. Rather then return their entity ids (

?attr in the query above), you can join through

:db/ident to find the programmatic identifiers that name each attribute. Judging from these attribute names, there are comments about stories and comments about other comments, exactly what you would expect given the schema.

Entities

In client/server databases where query happens in another process, it is often critical to get the answer and all of its details in a single query. (If you spread the work across multiple steps, you run the risk of the data changing between steps, leading to inconsistent results.)

Since Datomic performs queries in-process, against an immutable database value, it is feasible to decompose the work of query into steps. One very decomposition is to use a query to

find entities, and then to use Datomic's entity API to navigate to the relevant details about those entities.

Let's try it with the editor. First, use a query to find the editor's entity id:

Listing 9: Finding an Entity ID

(def editor-id (->> (d/q '[:find ?e

:in $ ?email

:where [?e :user/email ?email]]

db

"editor@example.com")

ffirst))

Notice that the query returns only the

?e, not any particular attribute values.

Now, you can call

d/entity, passing the database value and the entity id to get the editor entity.

Listing 10: Getting an Entity

(def editor (d/entity db editor-id))

When you first look at an entity, it appears to be a mostly empty map:

Listing 11: A Lazy Entity

editor

=> {:db/id 17592186045425}

That is because entities are

lazy. Their attribute values will appear once you ask for them:

Listing 12: Requesting an Attribute

(:user/firstName editor)

=> "Edward"

If you are feeling more eager, you can

touch an entity to immediately realize all of its attributes:

Listing 13: Touching an Entity

(d/touch editor)

=> {:user/firstName "Edward",

:user/lastName "Itor",

:user/email "editor@example.com",

:db/id 17592186045425}

Are Entities ORM?

No. Entities are used for some of the same purposes that you might use an ORM, but their capabilities are quite different. Entities differ from ORM objects in that the conversion between raw datoms and entities is entirely a mechanical process. There is never any configuration, and relationships are always available and (lazily!) navigable.

Entities also differ from most ORM objects in that relationships can be navigated in either direction. In the previous code example, you saw how the

d/touch method would automatically navigate all outbound relationships from an entity. However, you can also navigate

inbound relationships, by following the convention of prefixing attribute names with an underscore. For example, a user's comments happen to be modeled as a relationship from the comment to the editor. To reach these comments from the editor entity, you can navigate the

:comment/author attribute backwards:

Listing 14: Navigating Backwards

(-> editor :comment/_author)

=> [{:db/id 17592186045441}

{:db/id 17592186045443}

... ]

This process can, of course, be extended as far as you like, e.g. the following example navigates to all the comments people have made on the editor's comments:

Listing 15: Navigating Deeper

(->> editor :comment/_author (mapcat :comments))

=> ({:db/id 17592186045448}

{:db/id 17592186045450}

...)

Time travel

Update-in-place databases can tell you about the present, but most businesses need also to know about the past. Datomic provides this, by allowing you to take a value of a database as of a certain point in time.

Given any datom, there are three time-related pieces of data you can request:

- the transaction entity tx that created the datom

- the relative time, t of the transaction

- the clock time :db/txInstant of the transaction

The transaction entity is available as a fourth optional component of any data pattern. The following query finds the transaction that set the current value for the editor's first name:

Listing 16: Querying for a Transaction

(def txid (->> (d/q '[:find ?tx

:in $ ?e

:where [?e :user/firstName _ ?tx]]

db

editor-id)

ffirst))

Given a transaction id, the

d/tx->t function returns the system-relative time that the transaction happened.

Listing 17: Converting Transaction to T

(d/tx->t txid)

=> 1023

Relative time is useful for "happened-before" type questions, but sometimes you want to know the actual wall clock time. This is stored once per transaction, as the

:db/txInstant property of the

transaction entity:

Listing 18: Getting a Tx Instant

(-> (d/entity (d/db conn) txid) :db/txInstant)

=> #inst "2013-02-20T16:27:11.788-00:00"

Given a

t,

tx, or

txInstant value, you can travel to that point in time with

d/as-of. The example below goes back in time to before the point that the first name Edward was introduced, to see its past value:

Listing 19: Going Back in Time

(def older-db (d/as-of db (dec txid)))

(:user/firstName (d/entity older-db editor-id))

=> "Ed"

The example above shows an

as-of value of the database being used as an argument to

d/entity, but

as-of values can be used anyhere a current database value can be used, including as an argument to query.

Auditing

While

as-of is useful for looking at a moment in the past, you may also want to perform time-spanning queries. This is particularly true in audit scenarios, where you might want a complete history report of some value.

To perform such queries, you can use the

d/history view of a database, which spans all of time. The following query shows the entire history of the editor's

:user/firstName attribute:

Listing 20: Querying Across All Time

(def hist (d/history db))

(->> (d/q '[:find ?tx ?v ?op

:in $ ?e ?attr

:where [?e ?attr ?v ?tx ?op]]

hist

editor-id

:user/firstName)

(sort-by first))

=> ([13194139534319 "Ed" true]

[13194139534335 "Ed" false]

[13194139534335 "Edward" true])

The optional fifth field in a

:where data pattern, named

?op in the example above, matches true if a datom is asserted, or false if it is being retracted.

Transaction 13194139534319 set the editor's first name to "Ed", and transaction 13194139534335 set it to "Edward". The cardinality of

:user/firstName is one, which means that the system will only permit one value of that attribute per entity at any given time. To enforce this constaint, Datomic will automatically retract past values where necessary. Thus the transaction that asserts "Edward" also includes a retraction for the previous value "Ed".

Everything is Data

One of Datomic's design goals is "put declarative power in the hands of developers." Having queries that run in your process goes a long way to meet this objective, but what if you have data that is not in a Datomic database?

Datomic's query engine can run without a database, against arbitrary data structures in your application.

The following example shows the query you began with, this time running against a plain Java list in memory:

Listing 21: Querying Plain Java Data

(d/q '[:find ?e

:where [?e :user/email]]

[[1 :user/email "jdoe@example.com"]

[1 :user/firstName "John"]

[2 :user/email "jane@example.com"]])

=> #{[1] [2]}

The idea behind POJOs (plain old Java objects) is exactly right, and Datomic encourages you to take it one step further, to plain old lists and maps.

..