The new datomic.ion.cast library lets your application produce monitoring data that are integrated with Datomic's support for AWS CloudWatch.

Datomic Cloud and AWS CloudWatch

AWS CloudWatch provides a powerful set of tools for monitoring a software system running on AWS:

- Collect and track CloudWatch Metrics -- variables that measure the behavior of your system.

- Configure CloudWatch Alarms to notify operations or take other automated steps when potential problems arise.

- Monitor, store, and search CloudWatch Logs across all your AWS resources.

- Create CloudWatch Dashboards that provide a single overview for monitoring your systems.

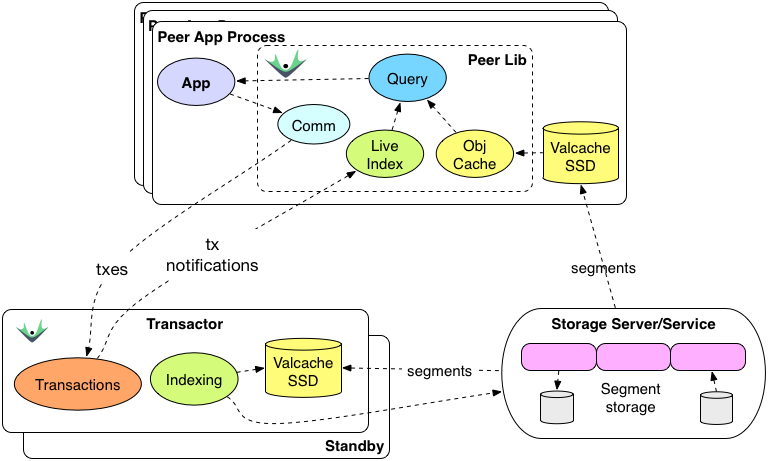

Datomic Cloud is fully integrated with all of these AWS monitoring tools. On the producing side, Datomic creates metrics and logs; on the consuming side, Datomic organizes metrics in custom dashboards like this Production Dashboard:

[Figure: Production Dashboard]

datomic.ion.cast

With the introduction of Datomic Ions, your entire application can run on Datomic Cloud nodes. The datomic.ion.cast namespace lets your Ion application code add its own monitoring data alongside the monitoring data already being produced by Datomic. Cast supports four categories of monitoring data:

- An event is an ordinary occurrence that is of interest to an operator, such as start and stop events for a process or activity.

- An alert is an extraordinary occurrence that requires operator intervention, such as the failure of some important process.

- Dev is information of interest only to developers, e.g. fine-grained logging to troubleshoot a problem during development. Dev data can be much higher volume than events or alerts.

- A metric is a numeric value in a named time series, such as the latency for an operation.
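As a rough illustration of the four categories, here is a minimal sketch of a unit of work that reports on itself through cast. It assumes the Ion library is on the classpath; the namespace `example.monitoring`, the function `import-batch`, the message strings, the metric name `:ImportLatency`, and the namespaced keys `::count` and `::item` are all hypothetical names chosen for this example.

```clojure
(ns example.monitoring
  (:require [datomic.ion.cast :as cast]))

(comment
  ;; Outside Datomic Cloud (for example at a local REPL), casts need a
  ;; destination. Evaluate this once to send them to stdout.
  (cast/initialize-redirect :stdout))

(defn import-batch
  "Hypothetical unit of work that emits all four kinds of monitoring data.
  Returns the number of items it was given."
  [items]
  ;; Event: an ordinary occurrence of interest to an operator.
  (cast/event {:msg "ImportStarted" ::count (count items)})
  (let [start (System/nanoTime)]
    (try
      (doseq [item items]
        ;; Dev: fine-grained, potentially high-volume detail for developers.
        (cast/dev {:msg "ImportingItem" ::item item}))
      (cast/event {:msg "ImportFinished"})
      (catch Exception e
        ;; Alert: an extraordinary occurrence that needs operator attention.
        (cast/alert {:msg "ImportFailed" :ex e})
        (throw e))
      (finally
        ;; Metric: a numeric value in a named time series, here the
        ;; elapsed time in milliseconds.
        (cast/metric {:name  :ImportLatency
                      :value (/ (- (System/nanoTime) start) 1e6)
                      :units :msec})))
    (count items)))
```

Calling `(import-batch [:a :b])` would emit a start event, two dev messages, a finish event, and one latency metric. The metric is cast in `finally` so that latency is recorded for failed runs as well as successful ones.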

To get started using ion.cast, you can

..